I have been wanting to write a blog post for a while and an issue I ran into this week seemed like the perfect opportunity.

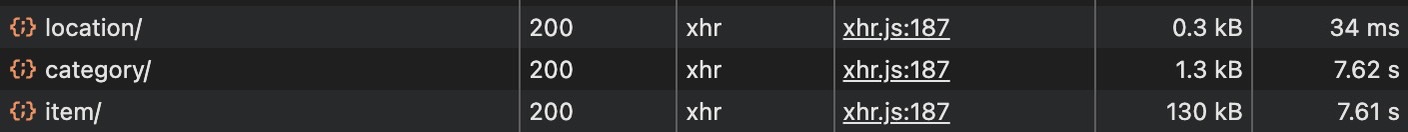

Recently I have been working on a Django based Inventory Management System and ran into a performance issue causing the application to be effectively unusable. The system was experiencing severe performance degradation when listing items, with response times in the dev environment exceeding five seconds and in some cases in production exceeding a whole minute (which meant those requests timed out and the user probably refreshed the page 3 times before giving up).

As any sane developer would I started by running a profile on the code and found that we spent a lot of time making SQL requests. Because of the profile, I decided to log the SQL queries that were being run and found that we were running hundreds of SUM() queries on every page load. This was due to the way we were calculating the quantity of items in the inventory.

The Problem: Gotta sum this up

Our models used a generic relation to store transactions for both items and item variations. Each model provided a quantity property that executed two aggregate queries:

@property

def quantity(self):

inbound = (

self.transactions

.filter(from_location__isnull=True)

.aggregate(sum=Sum("quantity"))['sum'] or 0

)

outbound = (

self.transactions

.filter(to_location__isnull=True)

.aggregate(sum=Sum("quantity"))['sum'] or 0

)

return inbound - outboundWhen listing one hundred items, Django fired that snippet twice per item. That meant two hundred queries on the inventory_transaction table for just a single page load which was quite frankly unacceptable.

Initial Refactoring: How about one?

I first rewrote the way we calculated quantities to reduce the number of queries. Instead of executing two separate aggregates for each item, I pushed all the summing into a single SQL statement by using conditional aggregates in an annotation:

from django.db.models import Sum, Case, When, IntegerField, F

items = (

Item.objects

.filter(active=True)

.annotate(

total_in=Sum(

Case(

When(transactions__from_location__isnull=True,

then='transactions__quantity'),

default=0,

output_field=IntegerField(),

)

),

total_out=Sum(

Case(

When(transactions__to_location__isnull=True,

then='transactions__quantity'),

default=0,

output_field=IntegerField(),

)

),

)

.annotate(current_quantity=F('total_in') - F('total_out'))

)This change collapsed hundreds of queries into one. It improved response time from five seconds to around one second. But at scale, scanning the entire transactions table on every request still took too long, especially with high transaction volumes. I needed a way to get sub-millisecond reads.

Denormalization: Maybe just store it?

A simple solution to this problem was to denormalize the data. Instead of calculating current_quantity on the fly, I decided to store it directly in the Item and ItemVariation models. This way, I could read the current quantity in constant time without any joins or aggregates.

So a quick migration later I had something that looked like this:

class Item(models.Model):

# existing fields ...

current_quantity = models.IntegerField(default=0)Since these queries were no longer aggregates, we saw huge performance improvements. Listing items now took under ten milliseconds, even with thousands of items.

But this introduced a new challenge: how to keep current_quantity in sync with the transactions table, which was still the source of truth for inventory movements.

Keeping Data in Sync: Signals to the rescue

I needed to keep current_quantity accurate whenever transactions were created, updated, or deleted.

To achieve this, I used Django's signals to listen for changes to the Transaction model. Whenever a transaction was created, updated, or deleted, I would adjust the current_quantity field accordingly.

from django.db.models.signals import pre_save, post_save, post_delete

from django.dispatch import receiver

from django.db.models import F

@receiver(pre_save, sender=Transaction)

def cache_old_delta(sender, instance, **kwargs):

if instance.pk:

previous = sender.objects.get(pk=instance.pk)

instance._old_delta = delta(previous)

else:

instance._old_delta = 0

@receiver(post_save, sender=Transaction)

def update_on_save(sender, instance, **kwargs):

diff = delta(instance) - instance._old_delta

if diff:

model = instance.good_type.model_class()

model.objects.filter(pk=instance.good_id)

.update(current_quantity=F('current_quantity') + diff)

@receiver(post_delete, sender=Transaction)

def update_on_delete(sender, instance, **kwargs):

diff = delta(instance)

model = instance.good_type.model_class()

model.objects.filter(pk=instance.good_id)

.update(current_quantity=F('current_quantity') - diff)This was a simple way to keep the current_quantity field in sync while just adding a single indexed UPDATE statement per transaction. This meant we didn't have to change any external interfaces or make large logic changes to the codebase.

Squeezing Out More Performance: Indexing

Because each transaction write or delete now updates an item row, I decided to add indexes to the Transaction model to speed up these updates. The good_type_id, good_id, and from_location_id/to_location_id fields were the most queried, so I added composite indexes on these columns.

This helped bring write times down to the low milliseconds, even under heavy load.

The TLDR

- Read performance: listing items now reads a single integer column. No joins or aggregates. Response times are under ten milliseconds, a nearly 30x improvement.

- Write performance: each transaction issues one indexed

UPDATE. MariaDB handles it smoothly. - Simplicity: signal-based syncing is straightforward. No codebase overhauls, just a few signal handlers to keep

current_quantityaccurate.

Would love to hear your thoughts on this approach and would greatly appreciate any feedback or suggestions for improvement (P.S. This is my first blog post).